How to Set Up and Run DeepSeek-R1 Locally With Ollama

Ever wanted to run your own AI chatbot without relying on the cloud? With Ollama, you can download and chat with powerful LLMs (Large Language Models) directly from your command line—no internet required after setup!

In this guide, we’ll walk you through installing Ollama, downloading the DeepSeek-R1 1.5B model (a lightweight yet smart AI), and chatting with it effortlessly on your PC. Whether you’re experimenting with AI or need an offline assistant, this setup is quick and easy.

Let’s get started! 🚀

Downloading and Installing Ollama

To get started with running a local LLM on your machine, you first need to install Ollama, the tool that makes it all possible. Follow these simple steps:

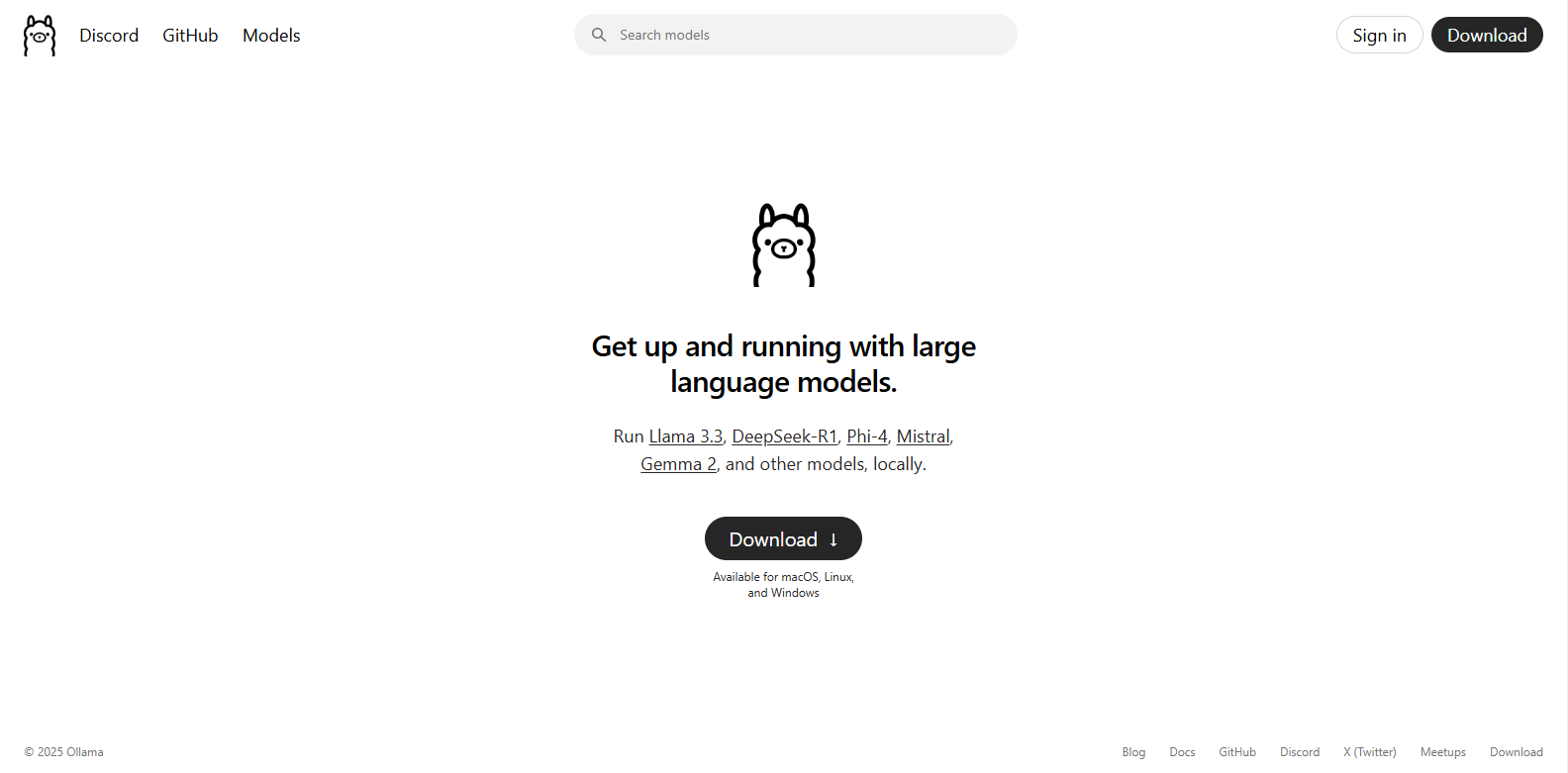

1. Accessing the Ollama Website

Ollama provides an easy-to-use interface for managing and running AI models locally. To download it:

- Open your web browser and go to Ollama’s official website (https://ollama.com).

- You’ll find a “Download” button on the homepage—click it.

2. Downloading the Installer for Your OS

Ollama supports multiple operating systems, so choose the version that matches yours:

- Windows: Download the .exe installer.

- macOS: Download the .pkg file.

- Linux: Follow the installation instructions provided on the site.

Once downloaded, move on to the installation process.

3. Installing Ollama on Your System

Now, let’s install the downloaded file:

- Windows: Run the .exe file and follow the on-screen instructions. The installation will set up Ollama automatically.

- macOS: Open the .pkg file and go through the installation wizard.

- Linux: Use the provided installation script or package manager commands from the Ollama website.

After installation, Ollama is available on your system. To make sure you can run it from any folder in the terminal, we need to add it to the system variables.

Adding Ollama to System Variables

Now that Ollama is installed, the next step is to ensure it can be accessed from anywhere in the terminal or command prompt. This is done by adding its installation folder to PATH, the environment variable your system searches when you type a command (this guide calls this adding it to the system variables).

1. Why Adding It to System Variables Is Important

By default, some installations don’t automatically add Ollama to the system PATH. If this happens, you may see an error like:

    'ollama' is not recognized as an internal or external command

Adding Ollama to the system variables allows you to run its commands (ollama pull, ollama run, etc.) from any directory in the terminal instead of navigating to its installation folder each time.

2. Steps to Add Ollama to System Variables

For Windows:

- Locate Ollama’s installation path. It’s usually found in the folder below. If yours isn’t there, use the search bar to find it; per-user installs commonly live in %LOCALAPPDATA%\Programs\Ollama instead.

    C:\Program Files\Ollama

- Copy the folder path.

- Open the Start Menu, search for Environment Variables, and click Edit the system environment variables.

- In the System Properties window, click Environment Variables.

- Under System Variables, find and select Path, then click Edit.

- Click New, paste the copied Ollama path, and click OK to save.

- Restart your command prompt or PC for changes to take effect.

For Linux:

Open a terminal and locate the Ollama binary. If you installed it via a package manager, the path is likely:

    /usr/local/bin/ollama

Add it to your PATH variable by running:

    echo 'export PATH=$PATH:/usr/local/bin' >> ~/.bashrc
    source ~/.bashrc

If you're using zsh, replace ~/.bashrc with ~/.zshrc.
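If you want to confirm that a folder really made it onto PATH, a few lines of Python can check for you. This is only a minimal sketch: the helper names `path_entries` and `is_on_path` are made up for this guide, and the folder passed in at the bottom is just the Linux example from above, so swap in your own install folder.

```python
import os


def path_entries(path_value, sep=os.pathsep):
    """Split a PATH-style string into its non-empty folder entries."""
    return [entry for entry in path_value.split(sep) if entry]


def is_on_path(directory, path_value, sep=os.pathsep):
    """Return True if `directory` is one of the folders listed in `path_value`."""
    # Normalise both sides so a trailing slash or letter case (on Windows)
    # does not cause a false "missing" result.
    wanted = os.path.normcase(os.path.normpath(directory))
    return any(
        os.path.normcase(os.path.normpath(entry)) == wanted
        for entry in path_entries(path_value, sep)
    )


if __name__ == "__main__":
    # Hypothetical folder to check; replace it with your Ollama install folder.
    folder = "/usr/local/bin"
    current_path = os.environ.get("PATH", "")
    print(f"{folder} on PATH: {is_on_path(folder, current_path)}")
```

Open a new terminal before running it, since PATH changes only show up in sessions started after the edit.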

3. Verifying the Installation

To confirm that Ollama is correctly set up, open a terminal or command prompt and run:

    ollama --version

If the installation is successful, you should see the installed version of Ollama displayed.
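The same check can be scripted, which is handy if you plan to call Ollama from your own tools later. The sketch below assumes Python 3 is installed; `find_executable` and `ollama_version` are hypothetical helper names, and the only Ollama command used is the `ollama --version` call shown above.

```python
import shutil
import subprocess


def find_executable(name="ollama"):
    """Return the full path of `name` if it can be found on PATH, else None."""
    return shutil.which(name)


def ollama_version(executable="ollama"):
    """Run `<executable> --version` and return its output, or None if not found."""
    path = find_executable(executable)
    if path is None:
        return None
    result = subprocess.run(
        [path, "--version"],
        capture_output=True,
        encoding="utf-8",
        errors="replace",
        check=False,
    )
    # Some tools print version info to stderr, so fall back to it if needed.
    return (result.stdout or result.stderr).strip()


if __name__ == "__main__":
    version = ollama_version()
    print(version if version else "ollama was not found on PATH")
```

If it prints the "not found" message, go back to the PATH steps above and open a fresh terminal before trying again.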

Now that Ollama is accessible from anywhere, let’s move on to choosing the right LLM to install!

Choosing an LLM Model

With Ollama set up, the next step is selecting a language model (LLM) to run. Ollama provides a variety of models for different use cases, from general chatbots to coding assistants. Let’s explore how to find them and why we’ll be using DeepSeek-R1 1.5B.

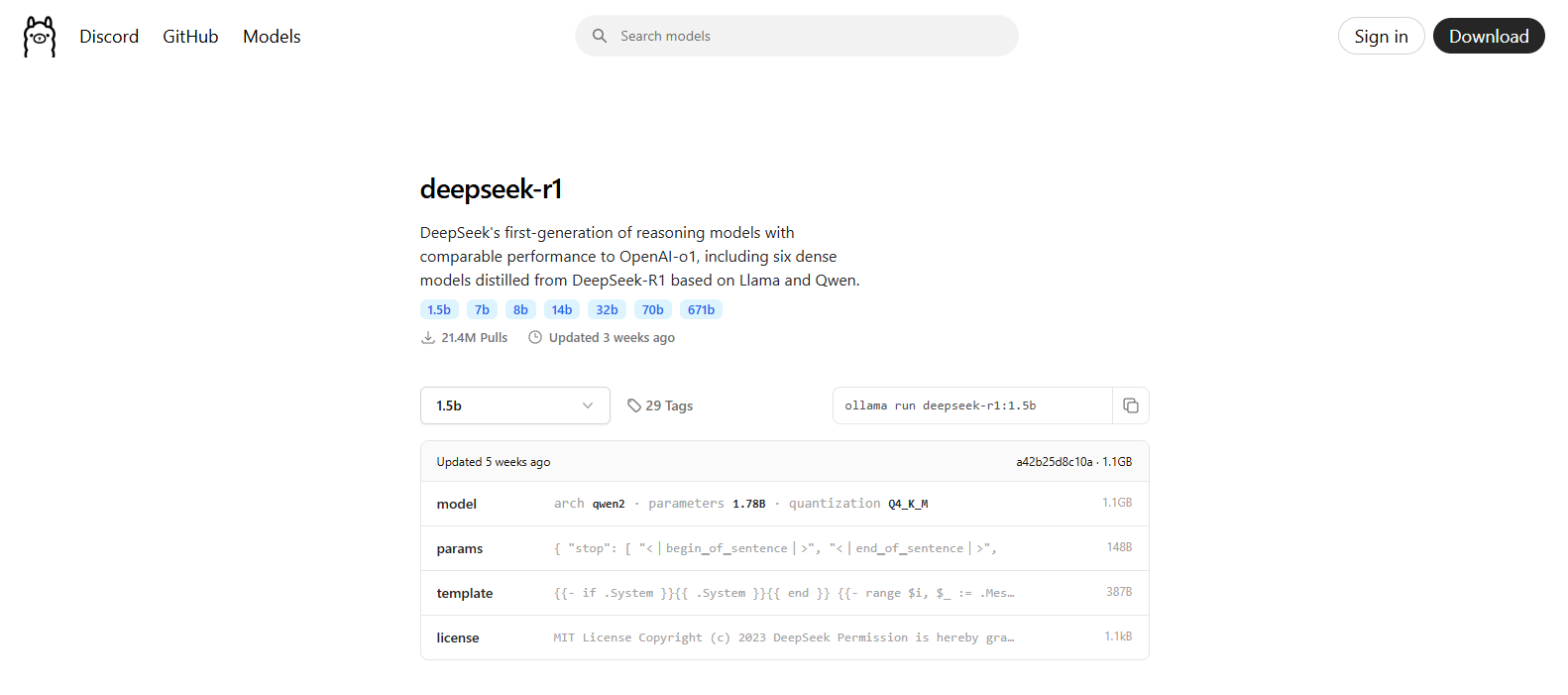

1. Browsing Available Models on Ollama’s Website

Ollama hosts a growing list of AI models that you can easily install and run. To browse available models:

- Visit Ollama’s Models Page (https://ollama.com/library).

- You’ll find various models like Llama3, Mistral, DeepSeek, CodeLlama, and more.

- Click on a model to see details like its capabilities, size, and installation command.

Each model serves a different purpose—some are optimized for speed, some for reasoning, and others for coding or writing assistance.

2. Why DeepSeek-R1 1.5B?

For this guide, we’ll be installing DeepSeek-R1 1.5B, and here’s why:

✅ Compact Size – At 1.1GB, it’s relatively lightweight compared to larger models, making it ideal for devices with modest specs.

✅ Good Conversational Capabilities – Unlike some smaller models that respond instantly but lack depth, DeepSeek-R1 1.5B works through a problem step by step before answering, which tends to lead to better responses.

✅ Smooth Performance – Since it’s not too resource-intensive, it can run efficiently on most personal computers without requiring high-end hardware.

Search for DeepSeek-R1 using the search bar and select it from the list. Click the dropdown to select the parameter size you want (you can go higher if you feel your PC can run it), then copy the command that pulls the model from the site. It should look like ollama run deepseek-r1:1.5b.
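The model reference in that command has two parts: the name before the colon and a tag after it, which here selects the parameter size. As a rough illustration, the hypothetical helper `split_model_ref` below pulls the two apart. It assumes the simple name:tag form used in this guide, and it assumes that a reference without a tag resolves to `latest`.

```python
def split_model_ref(ref):
    """Split a model reference such as 'deepseek-r1:1.5b' into (name, tag)."""
    name, _, tag = ref.partition(":")
    if not name:
        raise ValueError("model name is empty")
    # Assumption: a reference with no tag falls back to 'latest'.
    return name, tag or "latest"


if __name__ == "__main__":
    for ref in ("deepseek-r1:1.5b", "deepseek-r1:7b", "mistral"):
        name, tag = split_model_ref(ref)
        print(f"{ref!r}: name={name}, tag={tag}")
```

So going "higher" in the dropdown only changes the part after the colon; the rest of the command stays the same.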

Now that we’ve chosen our model, the next step is installing it.

Installing DeepSeek-R1 1.5B

Now that we’ve chosen DeepSeek-R1 1.5B, it’s time to install it. Ollama makes this process super simple—just one command, and you're good to go!

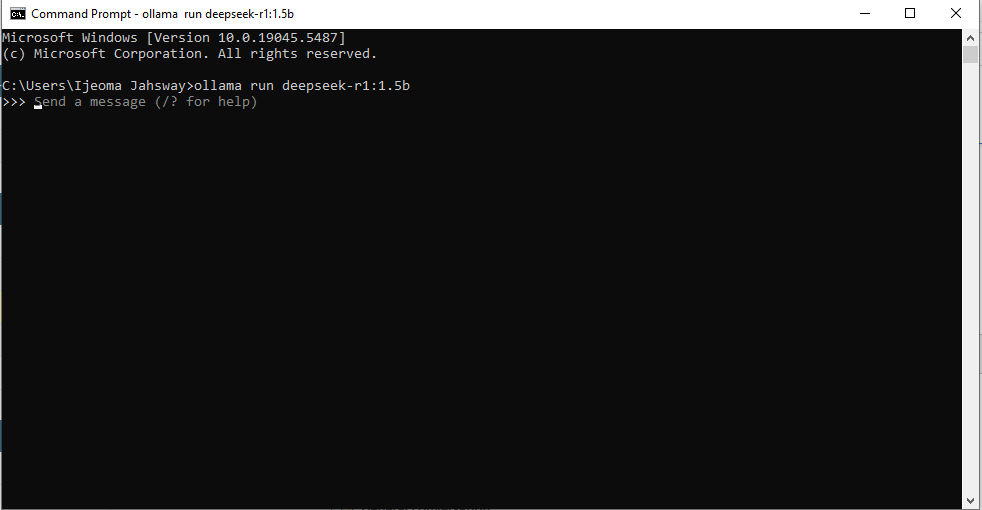

1. Running the Command to Pull the Model

To install and run DeepSeek-R1 1.5B, open your Command Prompt (CMD) or terminal and type:

    ollama run deepseek-r1:1.5b

Once you hit Enter, Ollama will start downloading the model.

2. Understanding the Download Size and Wait Time

- The DeepSeek-R1 1.5B model is about 1.1GB in size.

- The download speed depends on your internet connection, so it may take a few minutes.

- Once downloaded, the model will automatically launch, and you’ll see a chat interface where you can start interacting with the AI immediately.
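To get a feel for the wait, you can estimate the download time from the model size and your connection speed. This is back-of-the-envelope arithmetic, not a measurement: it assumes 1 GB means 1000 megabytes, that your connection holds its advertised speed the whole time, and the function name `estimate_download_seconds` is made up for this example.

```python
def estimate_download_seconds(size_gb, speed_mbps):
    """Estimate download time in seconds for `size_gb` gigabytes at `speed_mbps` megabits/s."""
    if speed_mbps <= 0:
        raise ValueError("speed_mbps must be positive")
    # 1 GB is treated as 1000 MB, and each byte is 8 bits.
    size_megabits = size_gb * 8 * 1000
    return size_megabits / speed_mbps


if __name__ == "__main__":
    model_size_gb = 1.1  # size of DeepSeek-R1 1.5B as stated in this guide
    for speed in (10, 50, 100):
        seconds = estimate_download_seconds(model_size_gb, speed)
        print(f"{speed:>4} Mbps: about {seconds / 60:.1f} minutes")
```

Real downloads usually run a little slower than this, so treat the numbers as a lower bound.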

After installation, you’re ready to chat! Let’s move on to testing the model.

Chatting with the LLM

Now that DeepSeek-R1 1.5B is installed, let’s put it to the test!

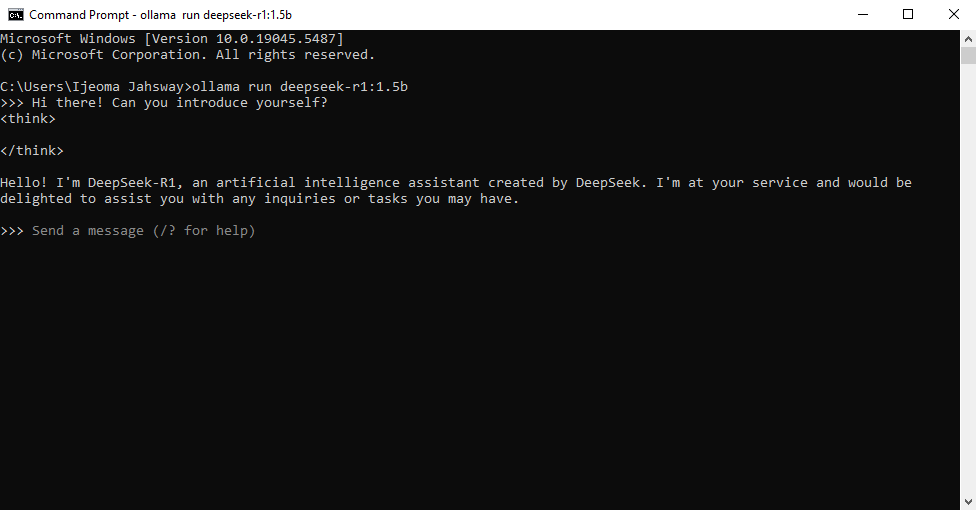

1. Running the Model in the Command Line

To start a chat with the model at any time, simply open CMD or terminal and run:

    ollama run deepseek-r1:1.5b

This will launch the LLM, and you’ll see a chat interface where you can start typing messages.
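If you would rather send a single prompt from a script than type into the chat, `ollama run` also accepts the prompt as an extra argument after the model name. Here is a minimal sketch of that idea in Python. The helper names `build_prompt_command` and `ask` are hypothetical, the 300-second timeout is an arbitrary choice, and the model is assumed to be downloaded already.

```python
import shutil
import subprocess

MODEL = "deepseek-r1:1.5b"


def build_prompt_command(prompt, model=MODEL):
    """Return the argument list for a one-shot `ollama run <model> <prompt>` call."""
    return ["ollama", "run", model, prompt]


def ask(prompt, model=MODEL, timeout=300):
    """Send one prompt to the local model and return its reply as text."""
    if shutil.which("ollama") is None:
        raise FileNotFoundError("ollama was not found on PATH")
    result = subprocess.run(
        build_prompt_command(prompt, model),
        capture_output=True,
        encoding="utf-8",
        errors="replace",
        timeout=timeout,
        check=True,  # raise CalledProcessError if ollama exits with an error
    )
    return result.stdout.strip()


if __name__ == "__main__":
    if shutil.which("ollama"):
        print(ask("Hi there! Can you introduce yourself?"))
    else:
        print("Install Ollama first, then rerun this script.")
```

Passing the command as a list avoids shell quoting problems when your prompt contains quotes or other special characters.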

2. Example Interactions to Test Its Capabilities

Once the model is running, try out some prompts to see how it responds:

📝 General conversation:

You: Hi there! Can you introduce yourself?

🧠 Testing its thinking ability:

You: If a train leaves at 3 PM traveling 60 km/h, how far will it go in 2.5 hours?

💡 Creative writing test:

You: Write a short, futuristic sci-fi story in 3 sentences.

These are just a few ways to test the model’s responses and reasoning skills.
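Small models can slip on arithmetic, so it helps to know the right answer to the train question before you judge the reply. The check is simply distance = speed × time. The sketch below works it out and also computes when the 2.5 hours are up; the function names and the calendar date are placeholders chosen for this example.

```python
from datetime import datetime, timedelta


def distance_km(speed_kmh, hours):
    """Distance covered at a constant speed: distance = speed * time."""
    return speed_kmh * hours


def end_time(departure, hours):
    """Clock time after travelling for `hours` hours from `departure`."""
    return departure + timedelta(hours=hours)


if __name__ == "__main__":
    departure = datetime(2025, 1, 1, 15, 0)  # arbitrary date, 3 PM departure
    print(f"Distance: {distance_km(60, 2.5)} km")
    print(f"Travel ends at: {end_time(departure, 2.5).strftime('%I:%M %p')}")
```

If the model’s answer differs from 150 km, read through its reasoning to see where it went wrong.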

3. Closing the Chat

When you’re done chatting, type:

    /bye

This will exit the chat and return you to the command prompt.

Tips on Usage

- You can run the model anytime using the same command:

    ollama run deepseek-r1:1.5b

- Ollama allows you to download multiple models for different use cases. Just visit Ollama’s model library and install any model you like.

- Model size matters!

- Smaller models (like 1B-3B parameters) run smoothly on most PCs.

- Larger models (7B, 13B, or 65B parameters) need far more RAM and, for comfortable speeds, a powerful GPU. Running a model beyond your PC’s capacity will cause lag and slow performance.
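For a rough sense of why size matters, you can estimate how much memory the weights alone take up: parameters multiplied by bits per weight, divided by 8. The sketch below assumes 4 bits per weight, a common setting for compressed local models but an assumption here rather than a figure from Ollama. Actual files and memory use run higher because of metadata, layers kept at higher precision, and the working memory needed during a chat. The function name `estimate_weights_gb` is made up for this example.

```python
def estimate_weights_gb(params_billions, bits_per_weight=4):
    """Rough size of the model weights in GB (1 GB = 10**9 bytes)."""
    if params_billions <= 0 or bits_per_weight <= 0:
        raise ValueError("both arguments must be positive")
    # 1 billion parameters at 8 bits each is 1 GB, so scale by bits / 8.
    return params_billions * bits_per_weight / 8


if __name__ == "__main__":
    # Parameter counts mentioned in this guide.
    for size in (1.5, 7, 13, 65):
        print(f"{size:>5}B parameters: at least {estimate_weights_gb(size):.2f} GB for weights")
```

Compare the result with your free RAM (or GPU memory) and leave generous headroom before picking a bigger model.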

With this setup, you now have your own locally running AI chatbot that works offline, without relying on cloud services! 🚀

And that’s it—you’re all set to explore the world of AI with Ollama! 🎉

Let’s Talk!

You’re now set up with DeepSeek-R1 1.5B on Ollama and can chat with your own AI model right from your command line! 🚀

What do you think? Have you tried other models? What are your thoughts on running LLMs locally vs. using cloud-based AI? Drop your questions and experiences in the comments below!

💬 Got stuck during installation? Let me know—I’d be happy to help troubleshoot!

🔍 Looking for more LLM options? Check out the Ollama model library and share your recommendations, or drop a comment and I'll recommend some models built for advanced coding logic!